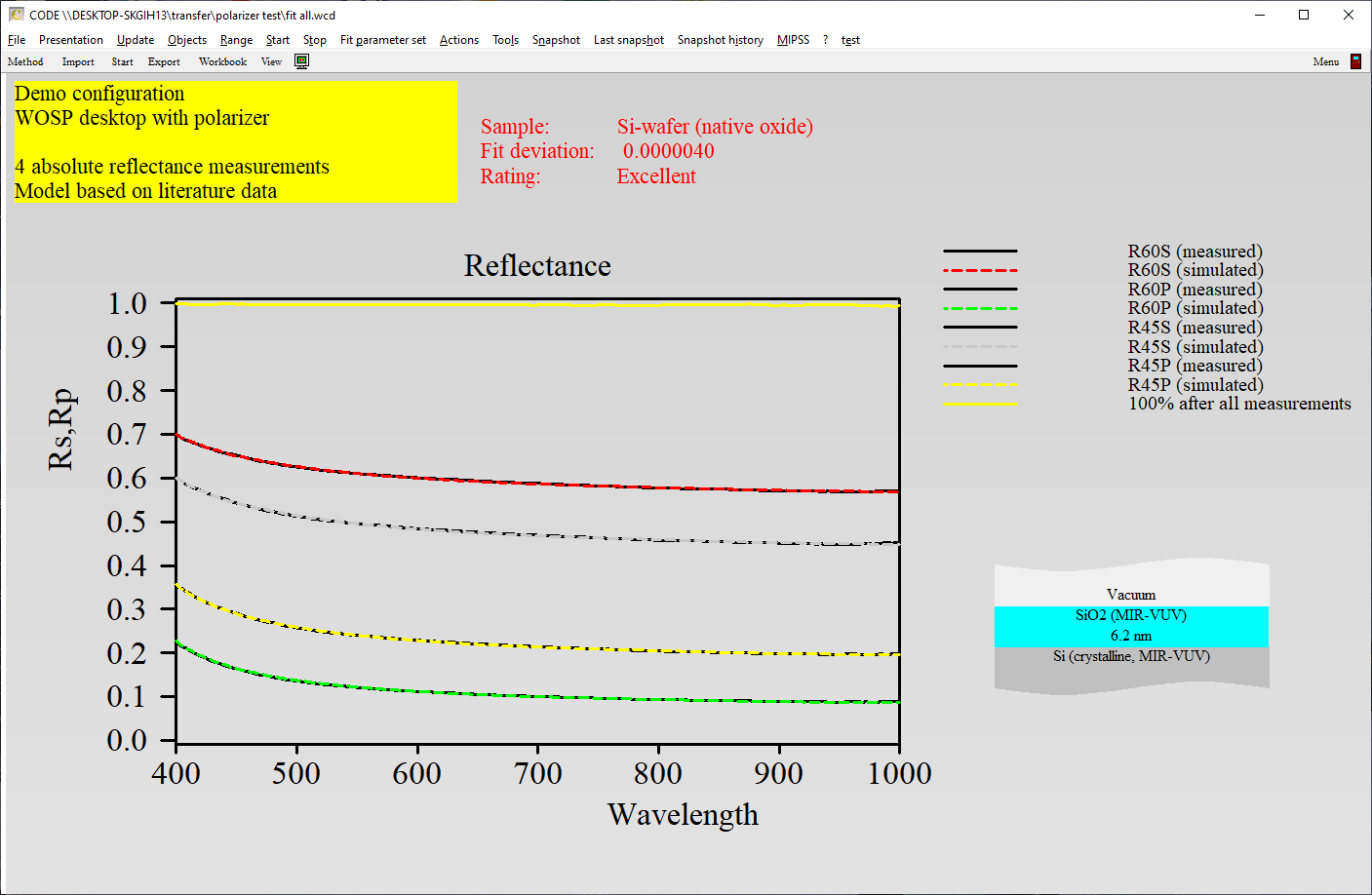

While answering some recent questions about optical modelling, I dug out some old slides. They explain some model improvements that were implemented a long time ago. Combined with today’s fast computer hardware, they provide the basis for high-quality modelling.

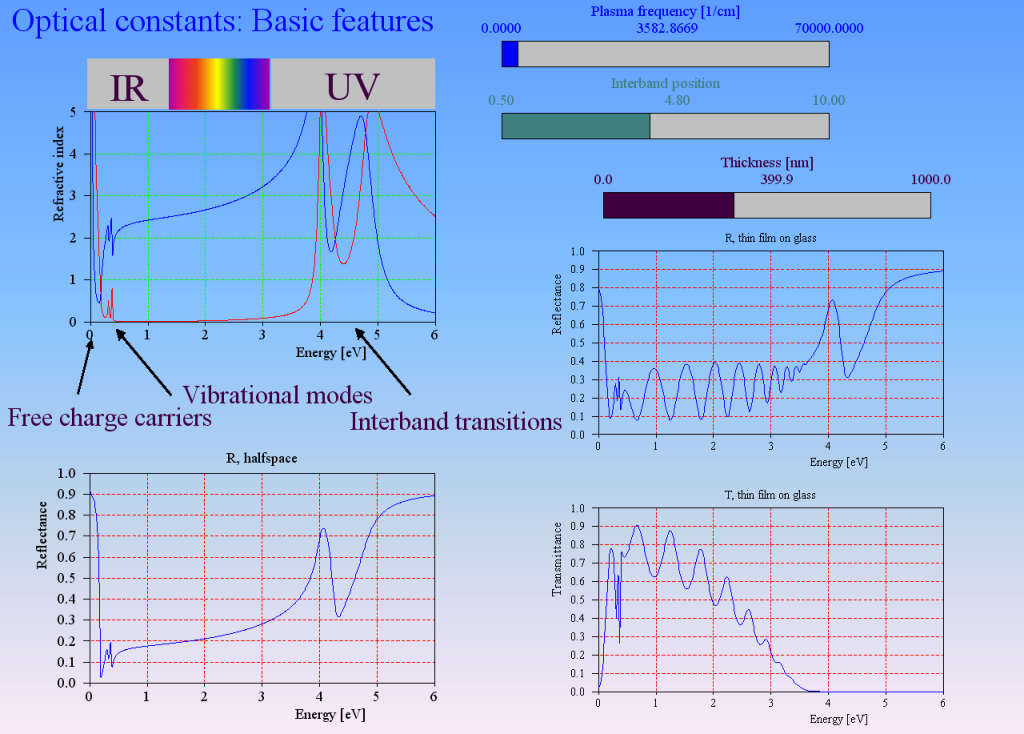

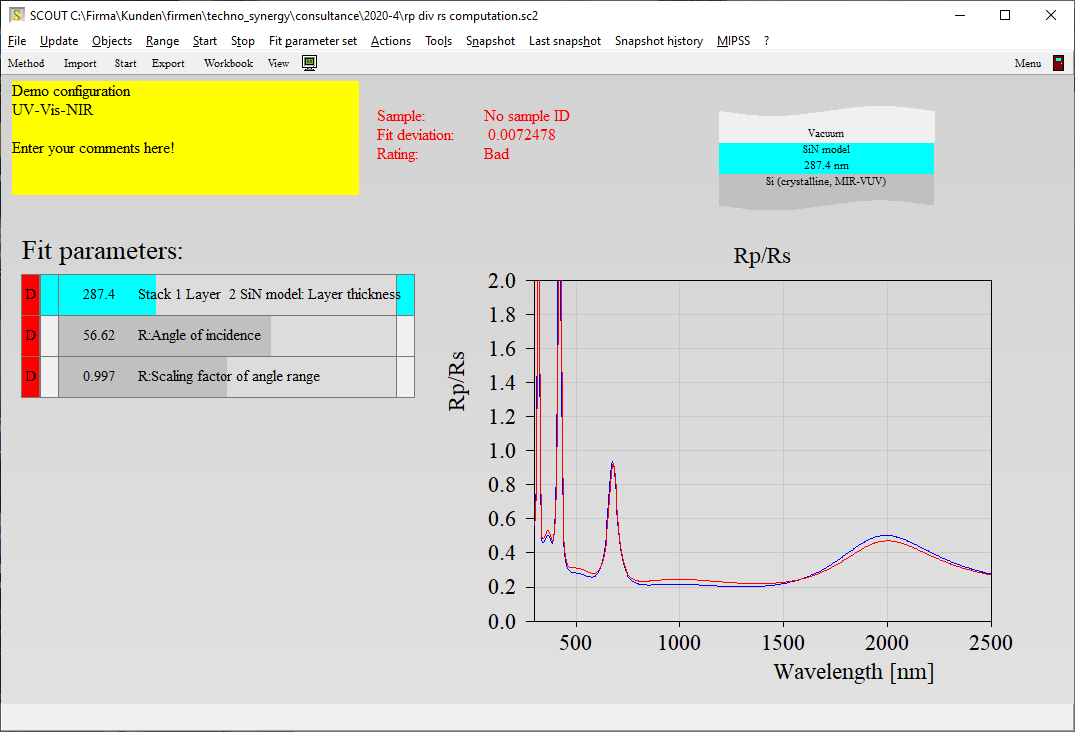

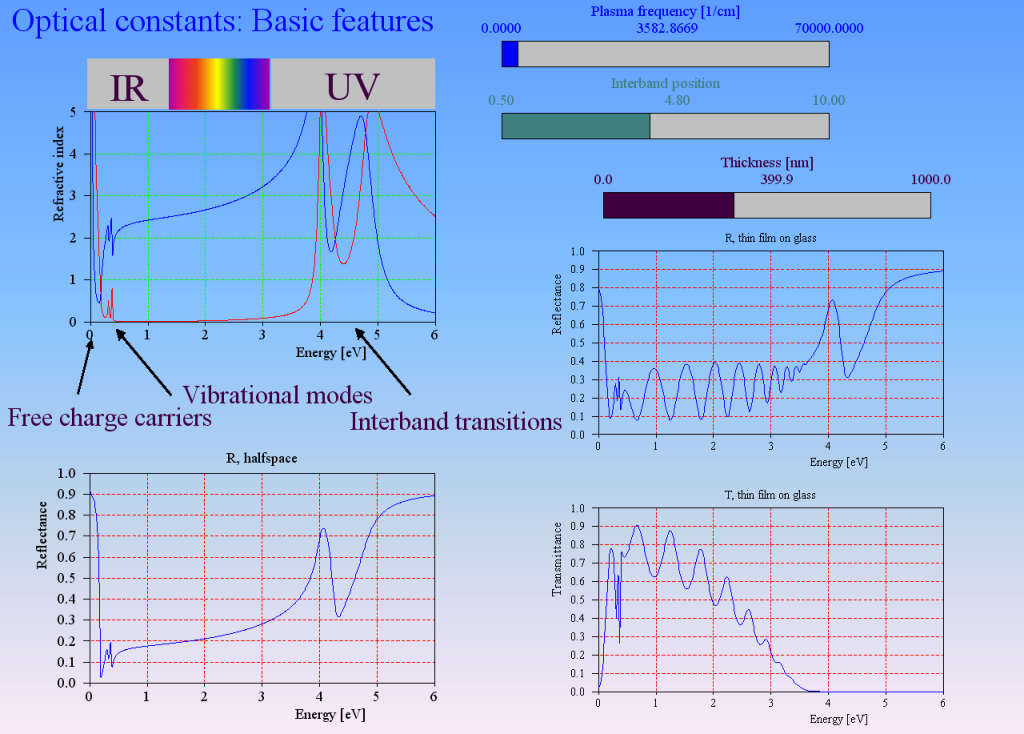

Optical constant models describe the response of matter to the electric field of a light wave. The main mechanisms are:

- acceleration of free charge carriers in conductive materials (free charge carrier absorption)

- exciting oscillations of bound charges (vibrational modes)

- lifting electrons from an occupied electronic state to an empty state (interband transitions)
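As a minimal sketch of how such contributions are usually combined: the susceptibilities of the individual mechanisms are added to a constant background to give the dielectric function, and the optical constants follow from n + ik = sqrt(eps). The function names and the numerical values below are hypothetical and serve only as an illustration.

```python
import cmath


def total_epsilon(eps_background, susceptibilities):
    """Add the susceptibility of each mechanism to a constant background."""
    return eps_background + sum(susceptibilities)


def refractive_index(eps):
    """Return (n, k) for a complex dielectric function value, n + ik = sqrt(eps)."""
    root = cmath.sqrt(eps)  # principal root: n >= 0, and k >= 0 if Im(eps) >= 0
    return root.real, root.imag


# Hypothetical susceptibility values at a single frequency (illustration only)
chi_free = -0.8 + 0.05j      # free charge carriers
chi_vibration = 0.1 + 0.02j  # vibrational modes
chi_interband = 1.9 + 0.0j   # interband transitions

eps = total_epsilon(1.0, [chi_free, chi_vibration, chi_interband])
n, k = refractive_index(eps)
print(f"eps = {eps:.3f}, n = {n:.3f}, k = {k:.3f}")
```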

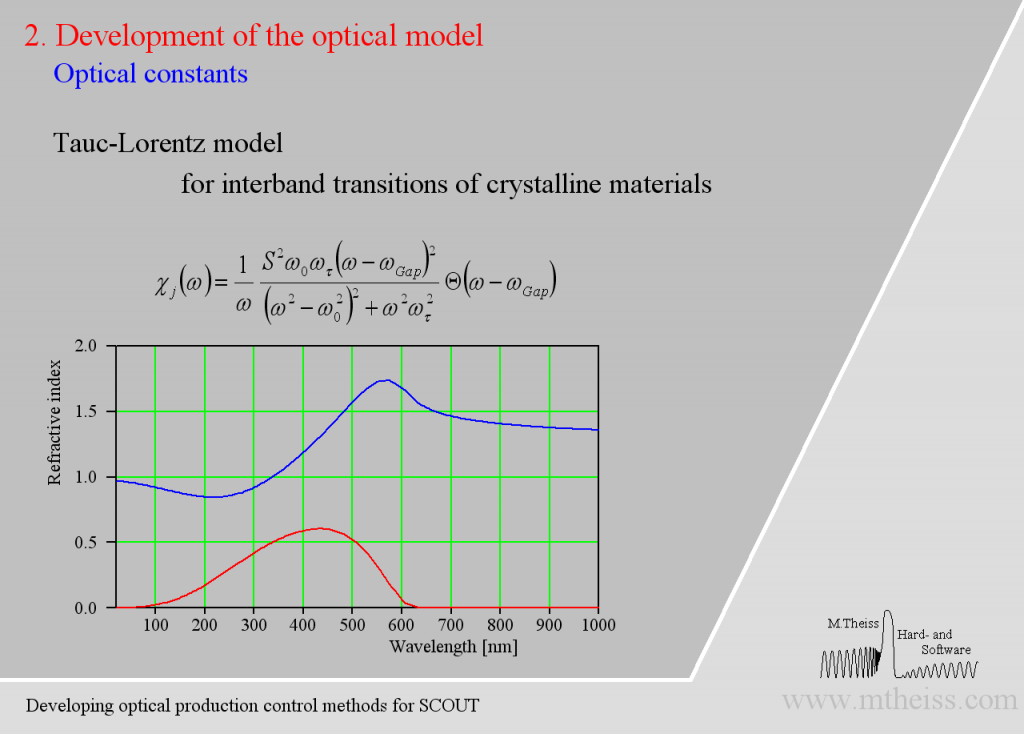

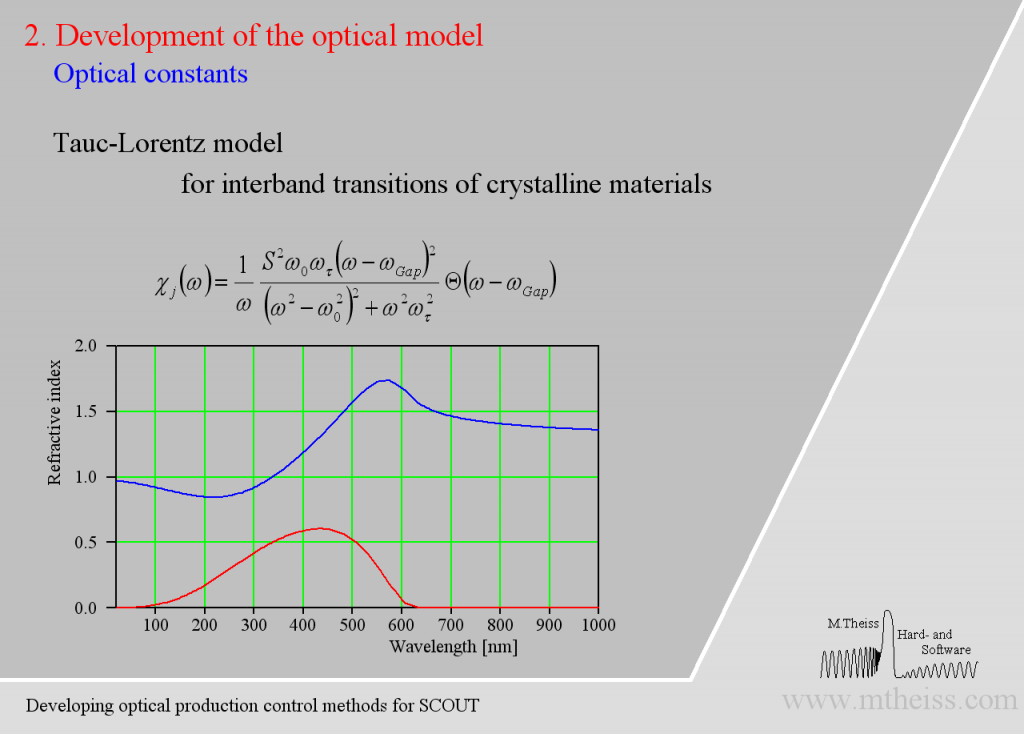

Interband transitions

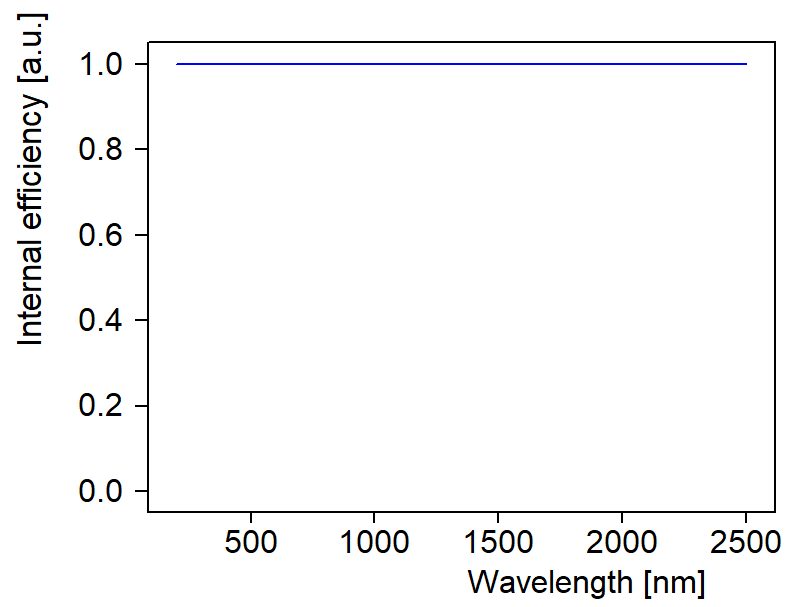

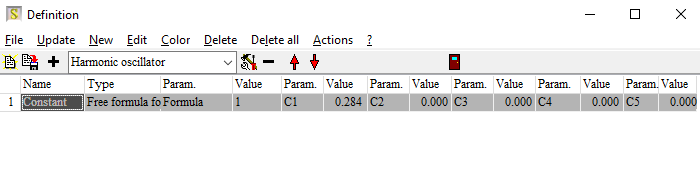

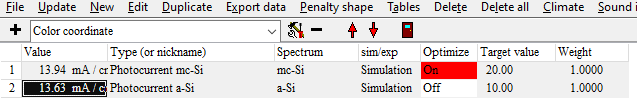

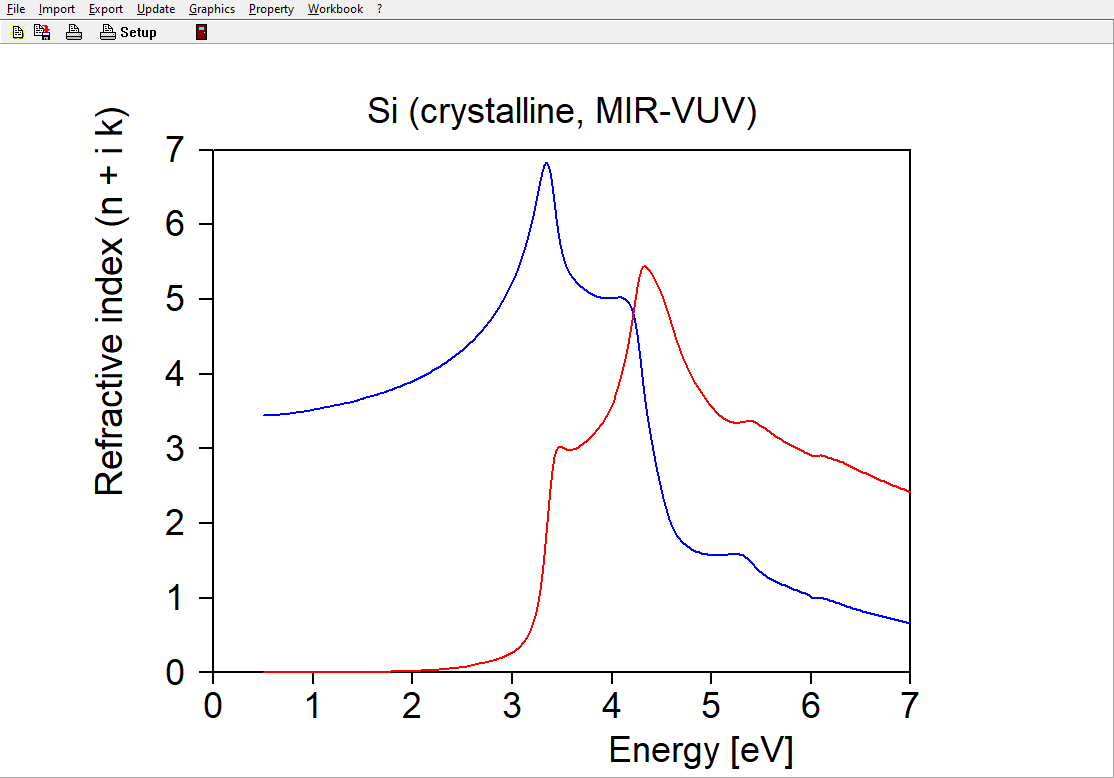

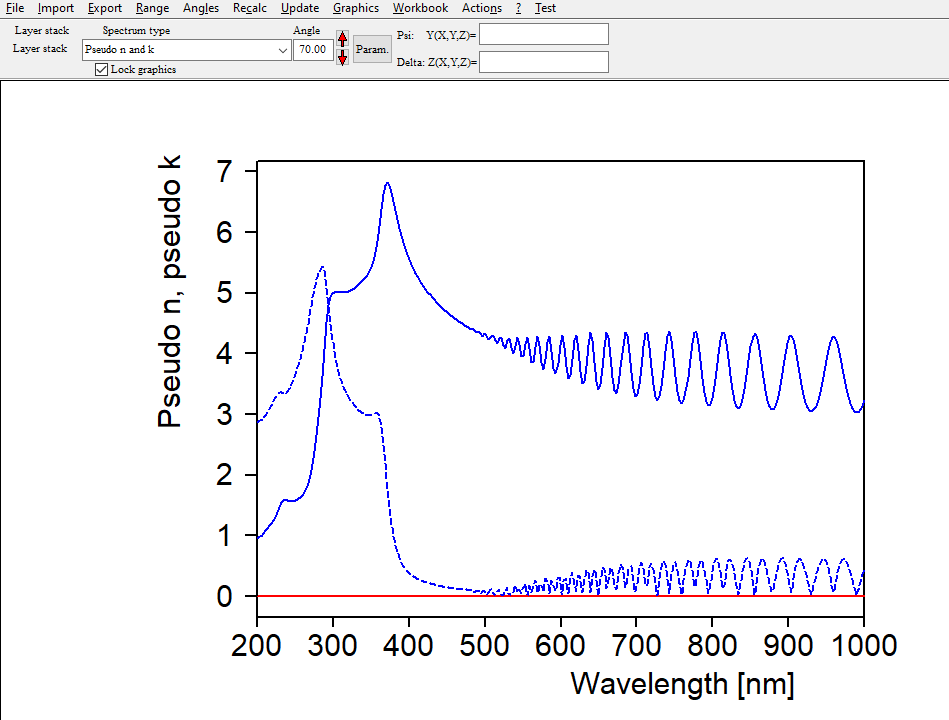

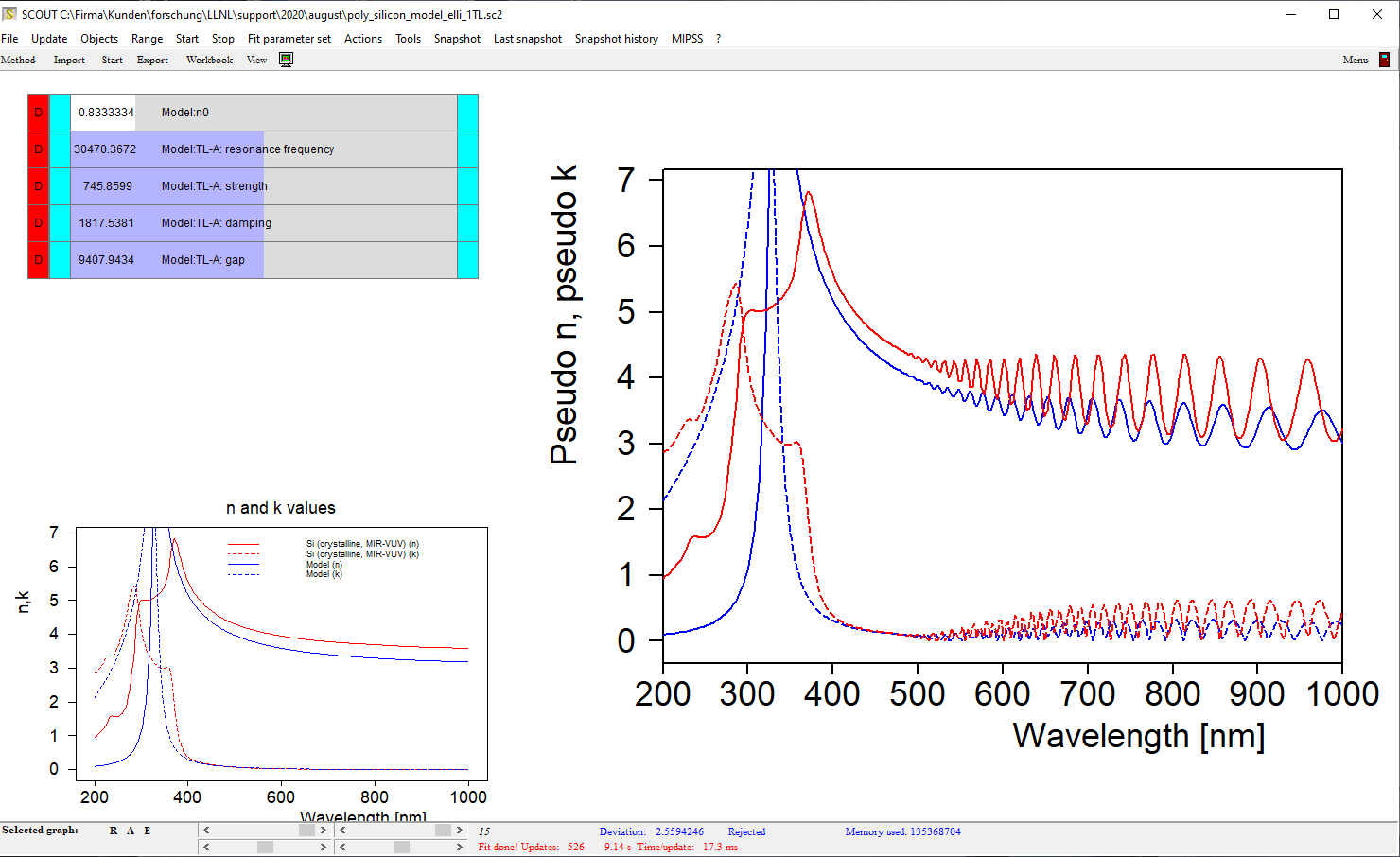

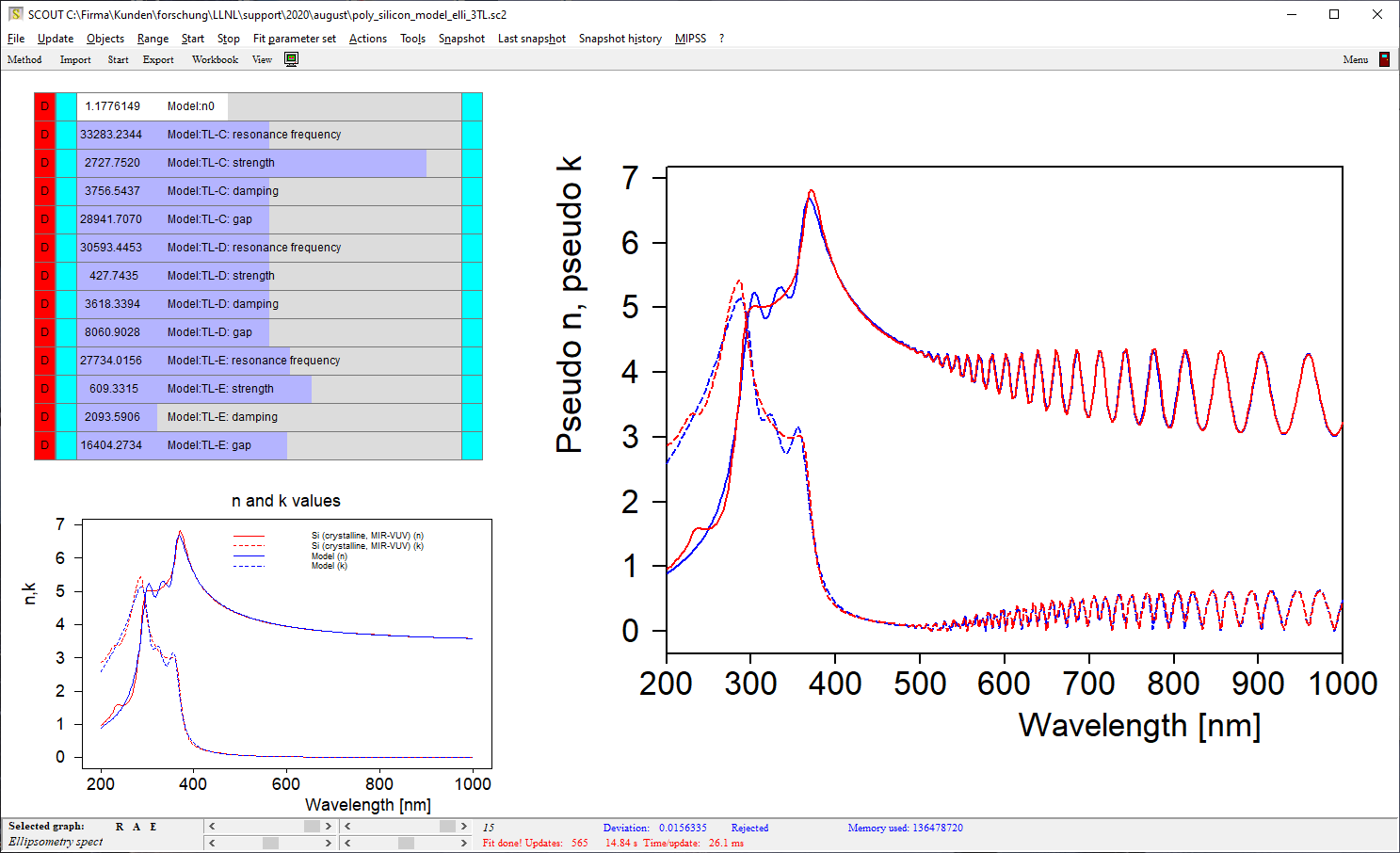

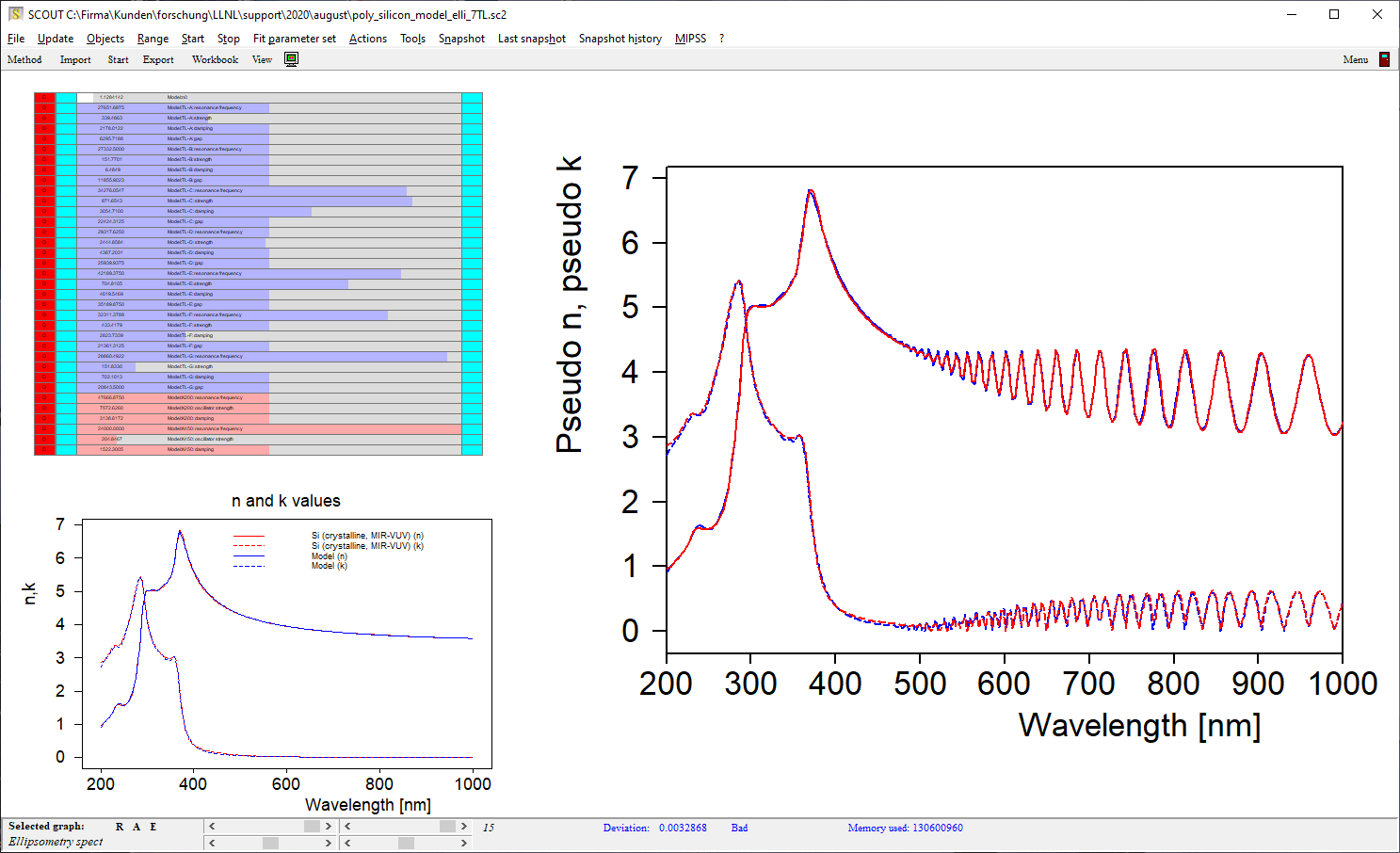

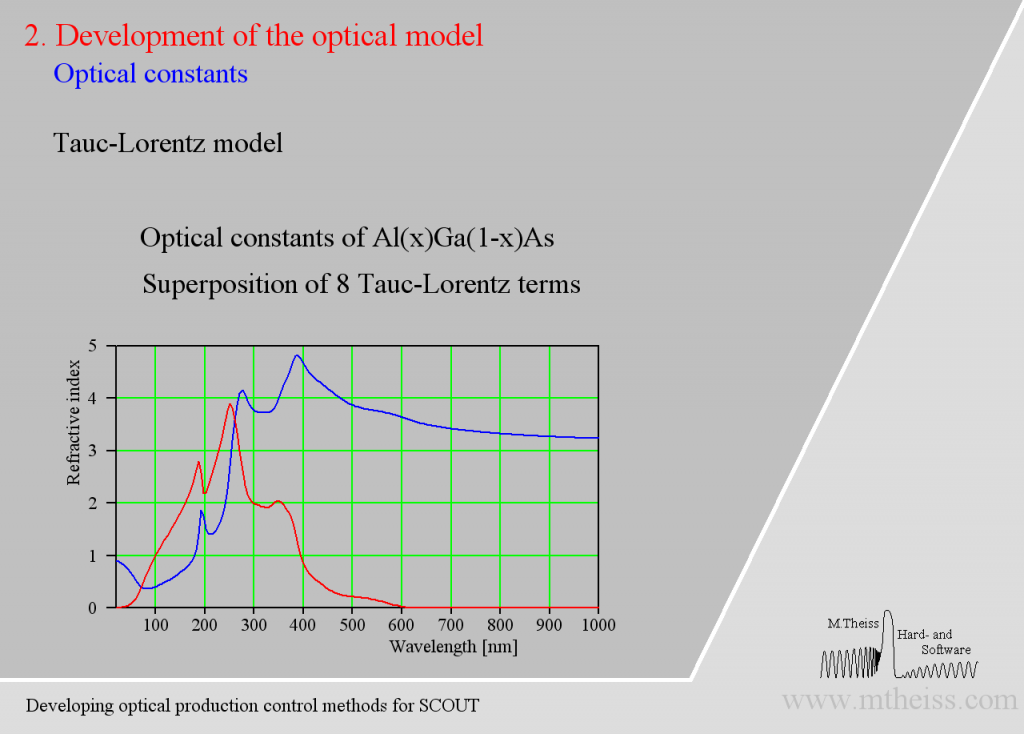

Electronic transitions can be roughly described by simple harmonic oscillators. However, these are not very realistic and should only be used far away from the resonance frequency of the oscillator. For band transitions of crystalline materials the Tauc-Lorentz model is appropriate:

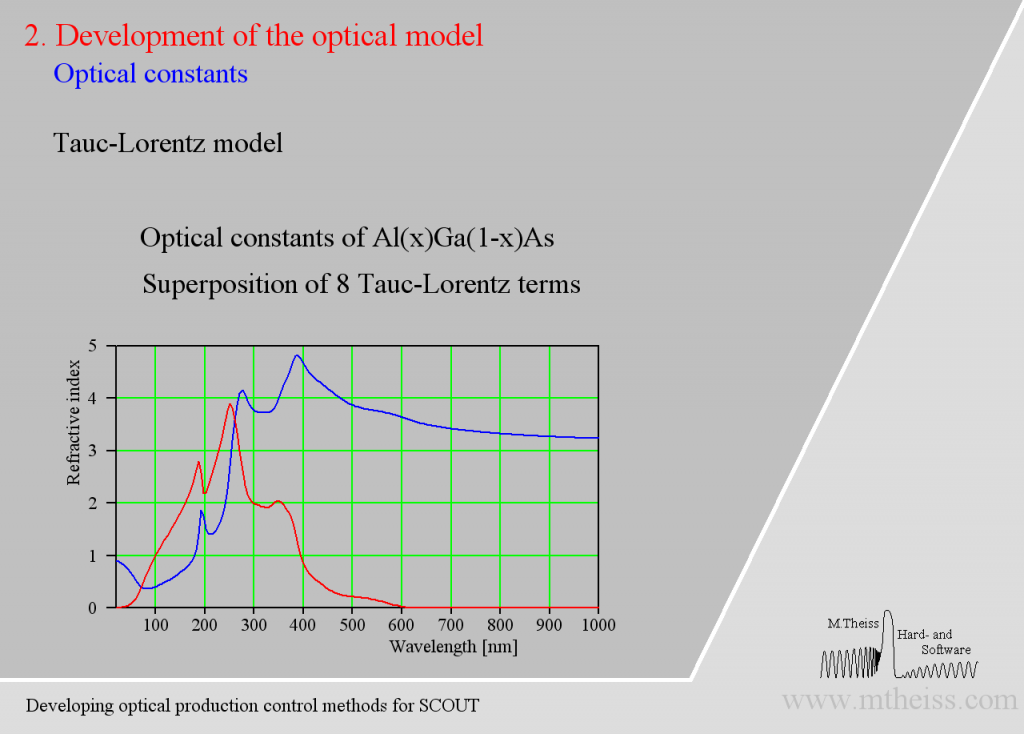
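For readers who want to experiment, here is a minimal sketch of the imaginary part of a Tauc-Lorentz term in the commonly published form (after Jellison and Modine). It may differ in detail from the expression on the slide, and the parameter names are my own. The real part follows from a Kramers-Kronig transformation and is omitted here.

```python
def tauc_lorentz_eps2(energy, amplitude, e0, broadening, gap):
    """Imaginary part of the dielectric function for one Tauc-Lorentz term.

    energy, e0 (peak position), broadening and gap share one energy unit
    (e.g. eV). Below the gap the absorption is exactly zero.
    """
    if energy <= gap:
        return 0.0
    numerator = amplitude * e0 * broadening * (energy - gap) ** 2
    denominator = (energy**2 - e0**2) ** 2 + broadening**2 * energy**2
    return numerator / (denominator * energy)


def sum_of_terms(energy, terms):
    """Superimpose several terms; each entry is (amplitude, e0, broadening, gap)."""
    return sum(tauc_lorentz_eps2(energy, *term) for term in terms)


# Hypothetical parameters for two transitions (illustration only)
terms = [(100.0, 4.0, 1.0, 2.0), (60.0, 5.5, 0.8, 2.0)]
for energy in (1.5, 3.0, 4.0, 5.5):
    print(energy, round(sum_of_terms(energy, terms), 3))
```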

Be prepared to superimpose several of these terms, since crystalline materials may feature many interband transitions, like AlGaAs:

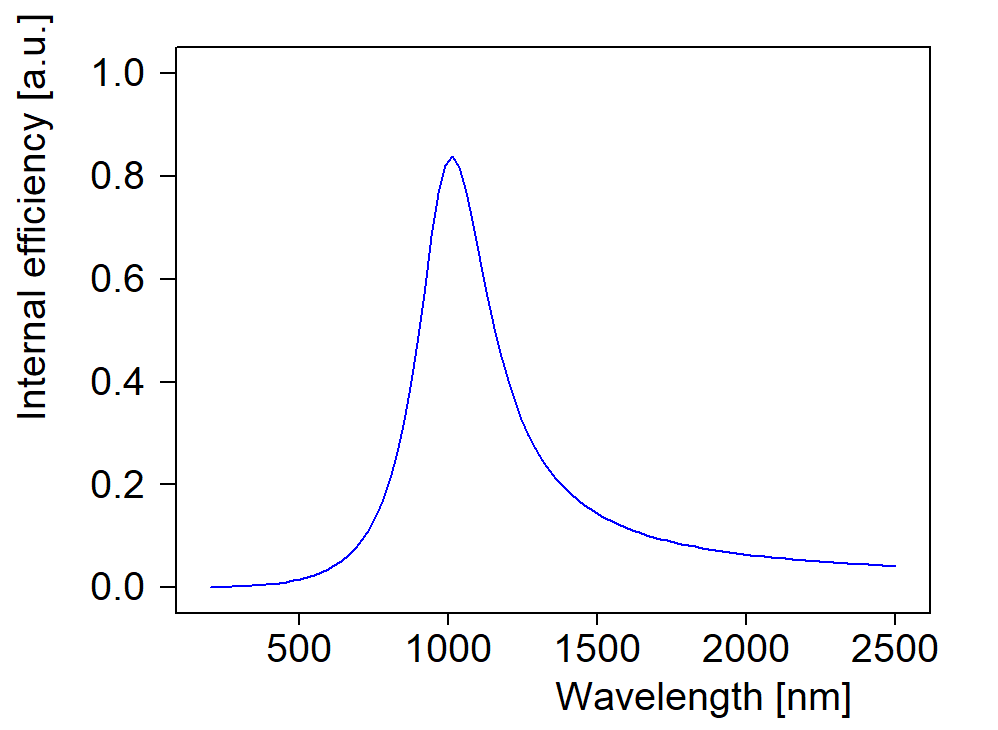

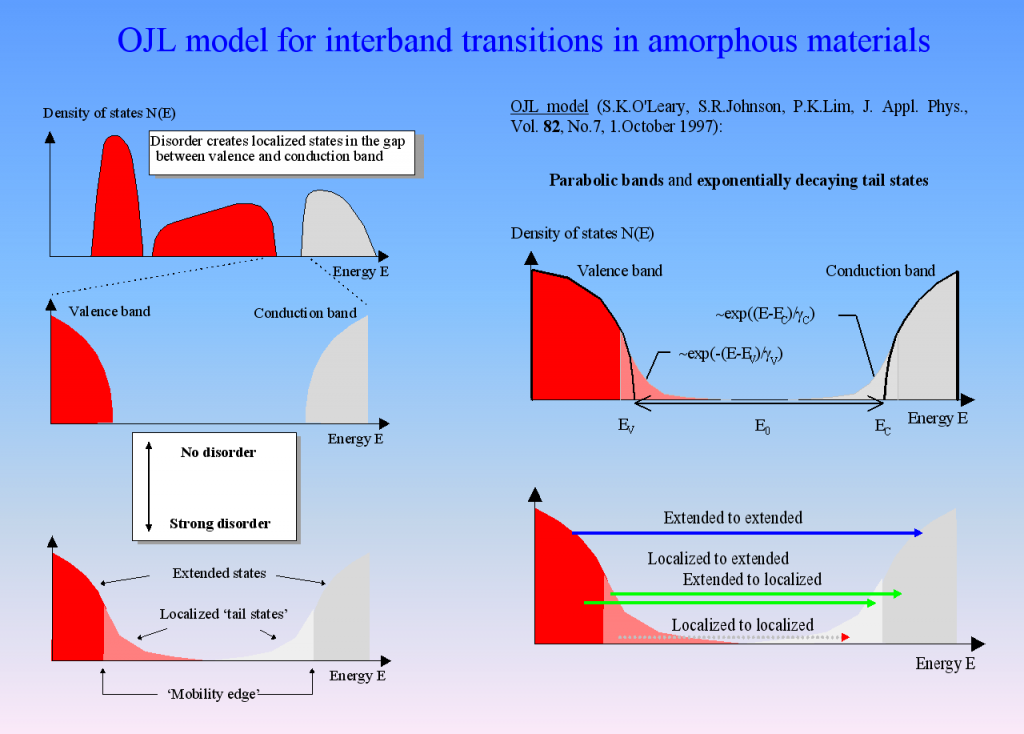

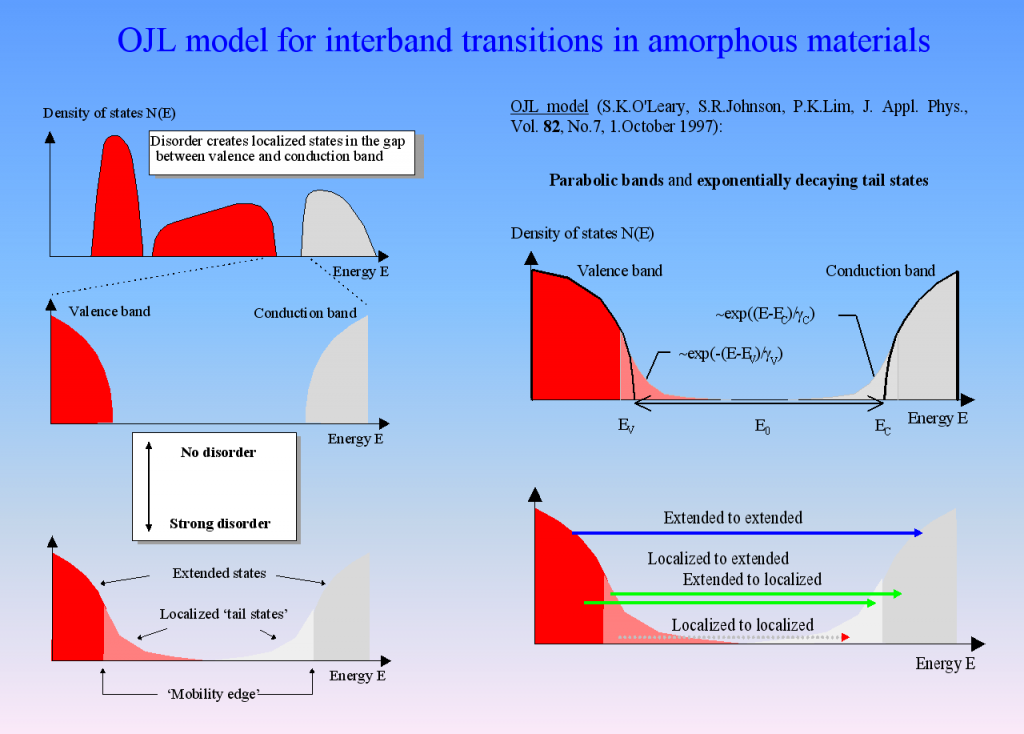

Amorphous semiconductors and almost all oxides and nitrides used in thin film applications can be described by the so-called OJL model. It is based on some very simple assumptions about the interband transition and states within the band gap which are caused by disorder:

For many materials the OJL model produces the correct shape of n and k with only a few parameters:

This makes fitting optical constants rather easy. In addition, the band gap parameter as well as the tail state exponent (also called ‘disorder parameter’) have physical meaning and characterize the state of the material.

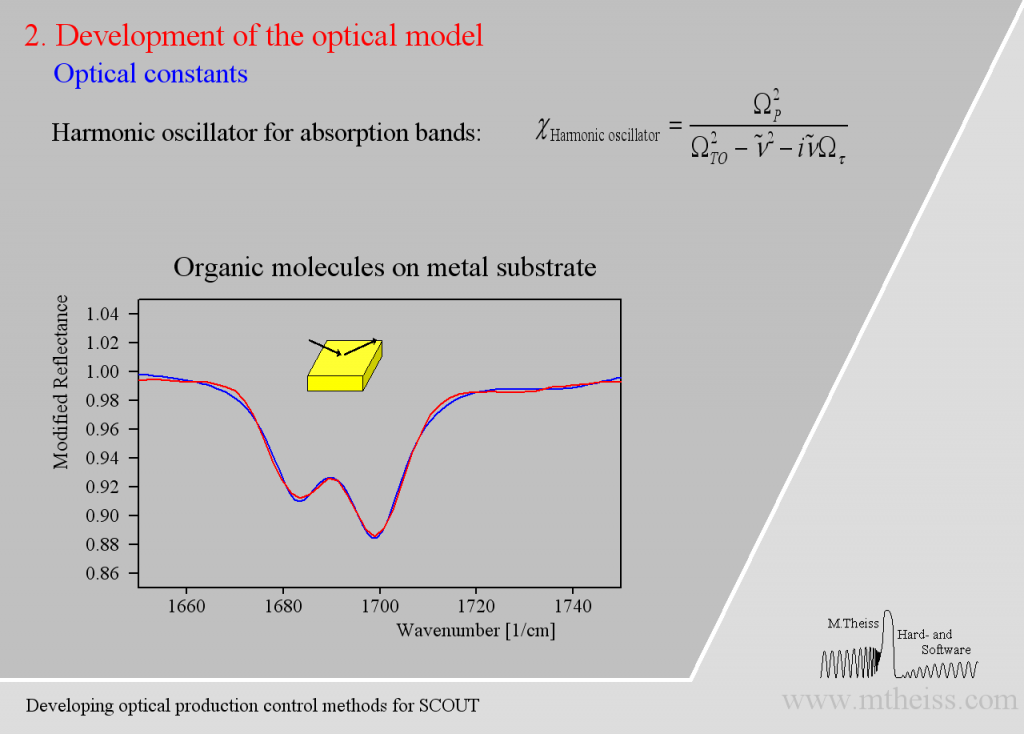

Vibrational modes

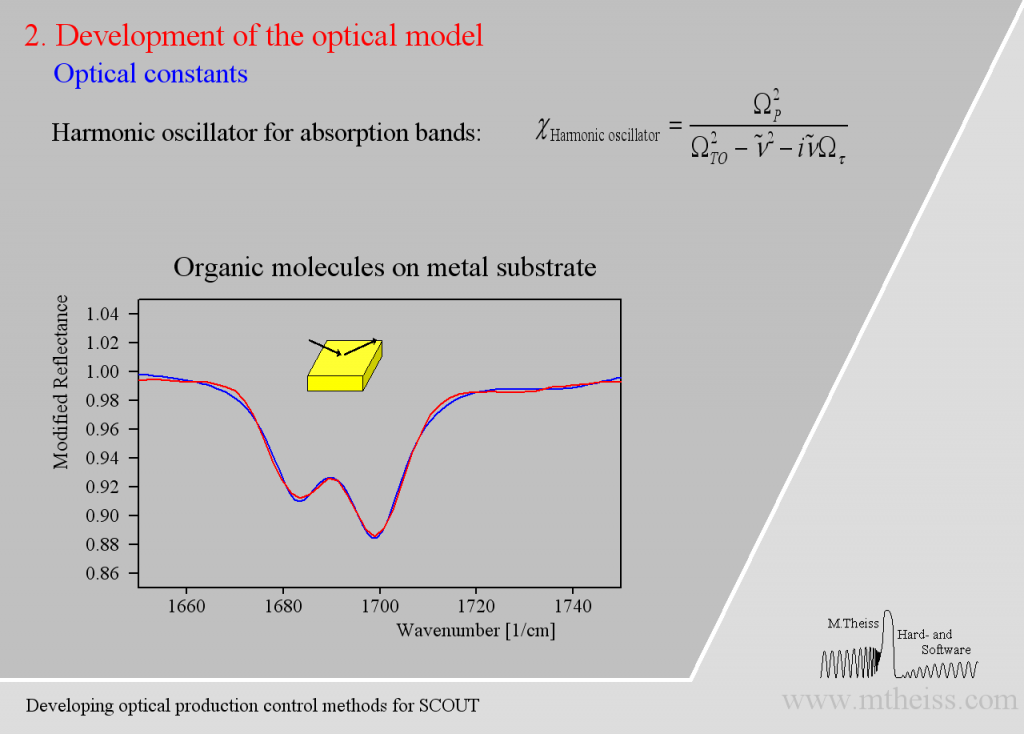

Although harmonic oscillators should be the right tool to describe vibrating parts of molecules or crystal lattices, they simplify too much. Here is an attempt to describe 2 strong bands and a weak side band of molecules on a metal surface:

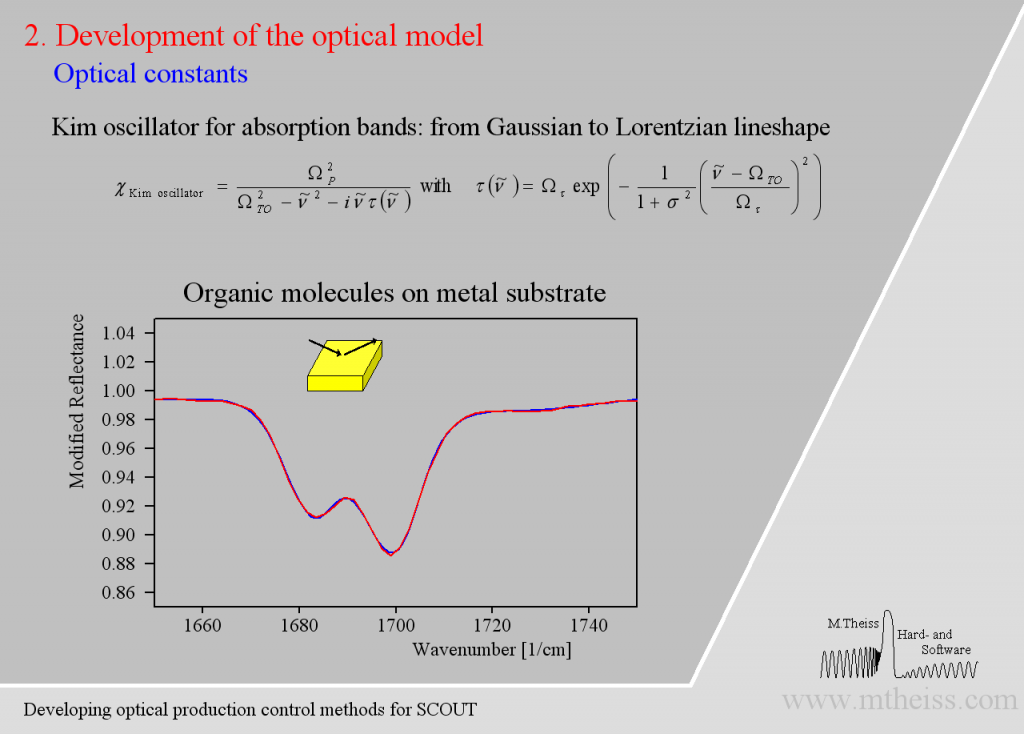

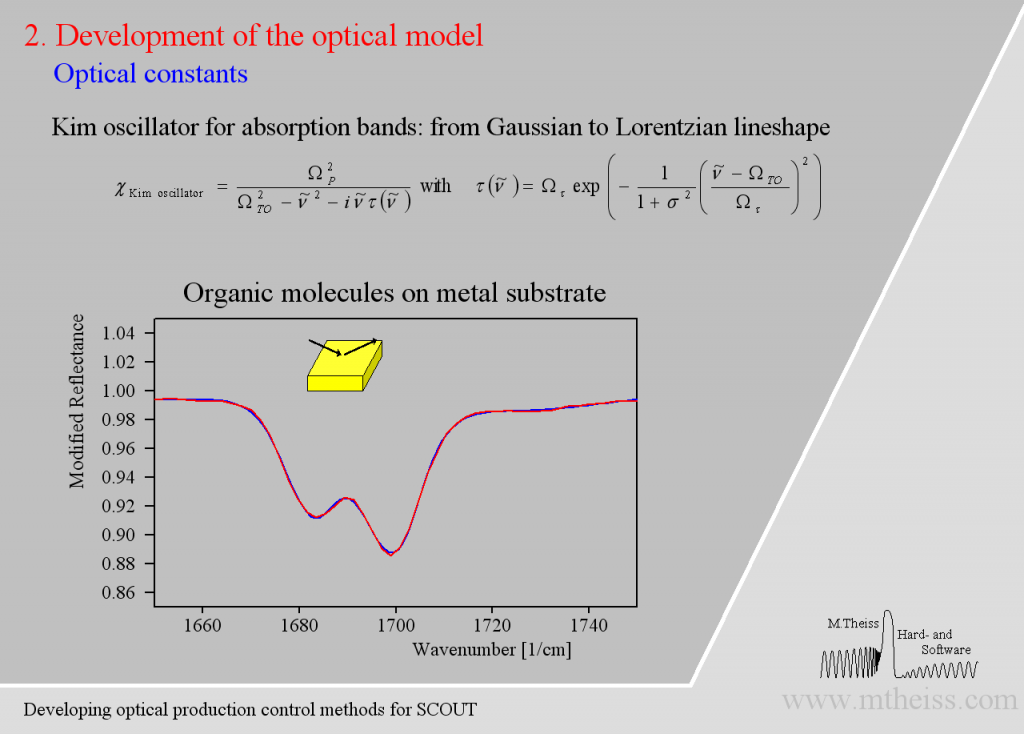

The model is acceptable, but the overall shape of the absorption bands is not reproduced very well. The reason is that the harmonic oscillator has a constant damping parameter, i.e. the vibration is damped in the same way in the center of the band (where the absorption is strong) as in the regions of weak absorption. Since the amplitude of the vibration is certainly largest in the resonance region, the interaction with the outside world is most likely strongest there as well. So whatever causes the damping is very likely stronger close to the resonance frequency and weaker far away from it. The so-called ‘Kim oscillator’ model uses a Gaussian decay of the damping with distance from the center of the oscillator, and it fits many absorption bands much better than the harmonic oscillator:

Besides describing vibrational modes, the Kim oscillator can also model high-energy interband transitions above the OJL model and the broad-band absorption of confined charge carriers (which are almost free but cannot leave a microcrystal).
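The difference between the two oscillator types can be sketched in a few lines. The form below follows my reading of the published Kim oscillator, in which the constant damping is replaced by a Gaussian function of the distance from the resonance. The extra parameter `sigma` (0 gives a Gaussian line shape, large values approach the harmonic oscillator) and all names are assumptions, not necessarily the exact implementation used in our software.

```python
import math


def harmonic_oscillator(nu, strength, resonance, damping):
    """Susceptibility of a classical oscillator with constant damping."""
    return strength**2 / (resonance**2 - nu**2 - 1j * nu * damping)


def kim_oscillator(nu, strength, resonance, damping, sigma):
    """Same oscillator, but the damping decays with distance from resonance."""
    alpha = 1.0 / (1.0 + sigma**2)
    tau = damping * math.exp(-alpha * ((nu - resonance) / damping) ** 2)
    return strength**2 / (resonance**2 - nu**2 - 1j * nu * tau)


# Hypothetical band at 1000 cm^-1 (illustration only)
for nu in (900.0, 980.0, 1000.0, 1020.0, 1100.0):
    lorentz = harmonic_oscillator(nu, 100.0, 1000.0, 20.0).imag
    kim = kim_oscillator(nu, 100.0, 1000.0, 20.0, sigma=0.0).imag
    print(f"{nu:7.1f}  harmonic: {lorentz:.4e}  Kim: {kim:.4e}")
```

At the resonance both expressions agree; in the wings the Kim oscillator absorbs much less, which is what removes the long tails of the harmonic oscillator.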

Free charge carrier excitation

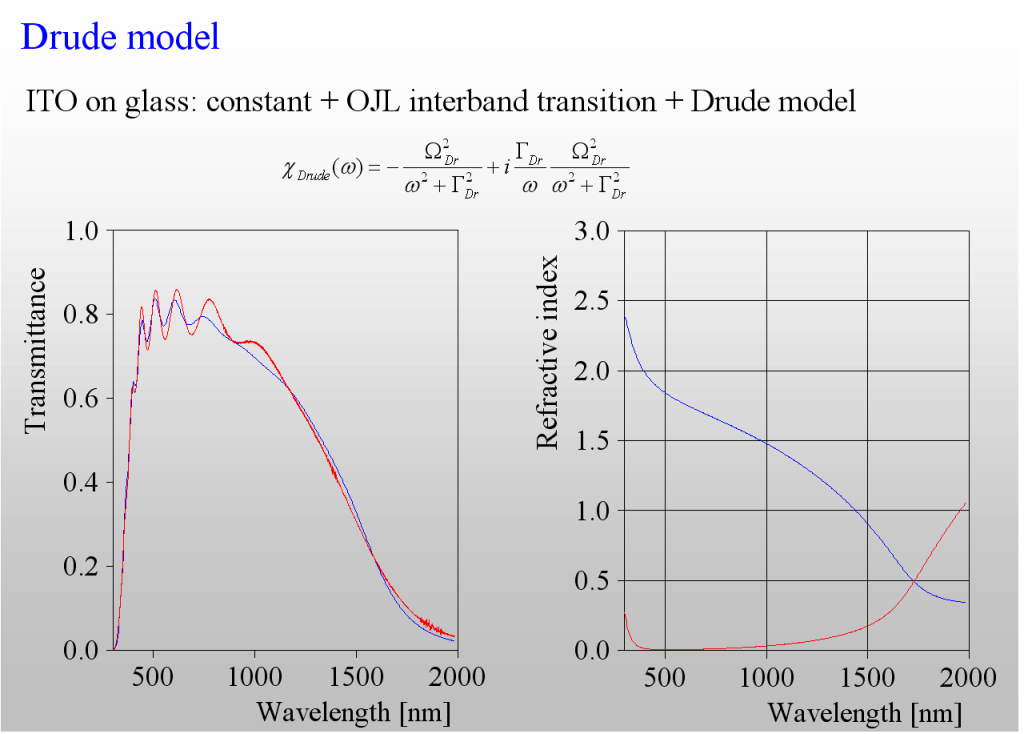

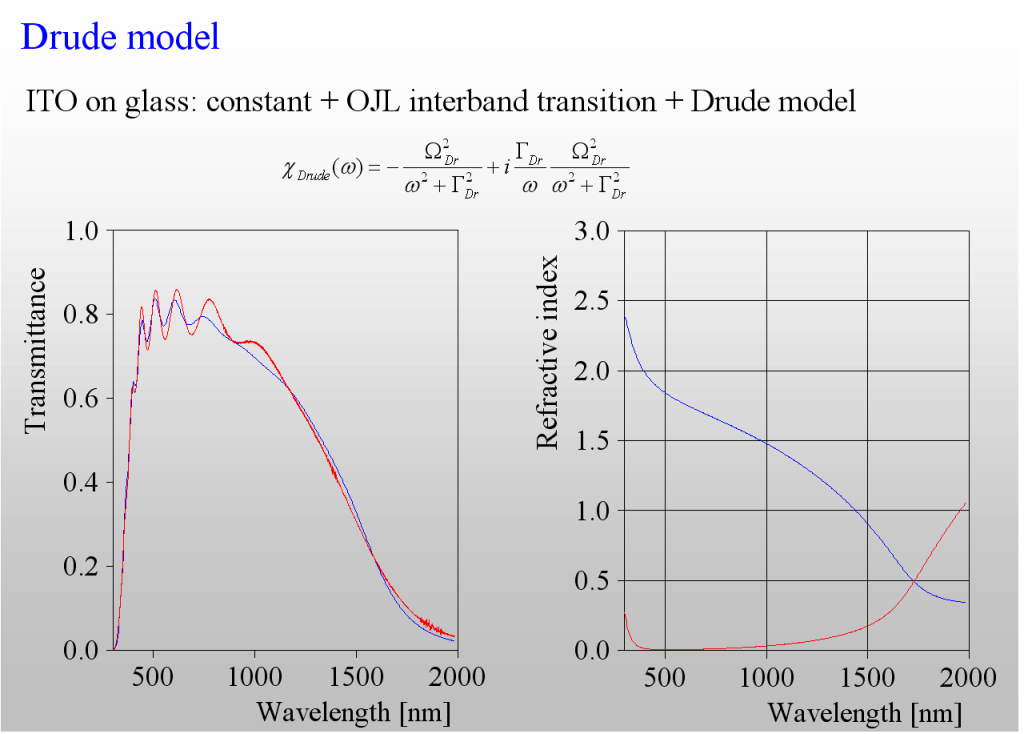

The absorption of light by the acceleration of free charges in a metal or doped semiconductor can be described by the classical Drude model. It has only 2 parameters: the plasma frequency is directly related to the concentration of charge carriers, whereas the damping constant is connected to the mobility (or the inverse mean free path).
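A minimal sketch of the textbook Drude expression and of the two relations mentioned above is given here. SI units, the effective mass and the numerical values for carrier density and mobility are assumptions chosen for illustration, and the plasma frequency is the unscreened one.

```python
import math

E_CHARGE = 1.602176634e-19     # elementary charge in C
EPS0 = 8.8541878128e-12        # vacuum permittivity in F/m
M_ELECTRON = 9.1093837015e-31  # electron rest mass in kg


def plasma_frequency(carrier_density, effective_mass):
    """Angular plasma frequency (rad/s); density in m^-3, mass in electron masses."""
    mass = effective_mass * M_ELECTRON
    return math.sqrt(carrier_density * E_CHARGE**2 / (EPS0 * mass))


def damping_from_mobility(mobility, effective_mass):
    """Damping constant (rad/s); mobility in m^2/(V s), mass in electron masses."""
    return E_CHARGE / (effective_mass * M_ELECTRON * mobility)


def drude_epsilon(omega, omega_p, gamma, eps_background=1.0):
    """Drude dielectric function at angular frequency omega (rad/s)."""
    return eps_background - omega_p**2 / (omega**2 + 1j * gamma * omega)


# Hypothetical TCO-like values: 1e21 cm^-3, 40 cm^2/(V s), m* = 0.35
omega_p = plasma_frequency(1e27, 0.35)
gamma = damping_from_mobility(40e-4, 0.35)
print(f"omega_p = {omega_p:.3e} rad/s, gamma = {gamma:.3e} rad/s")
print(drude_epsilon(0.5 * omega_p, omega_p, gamma, eps_background=4.0))
```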

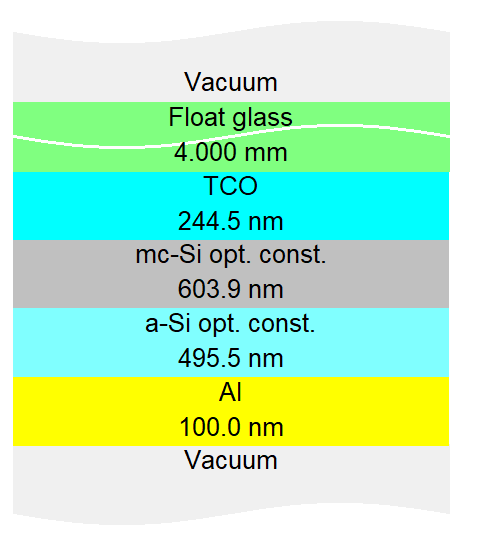

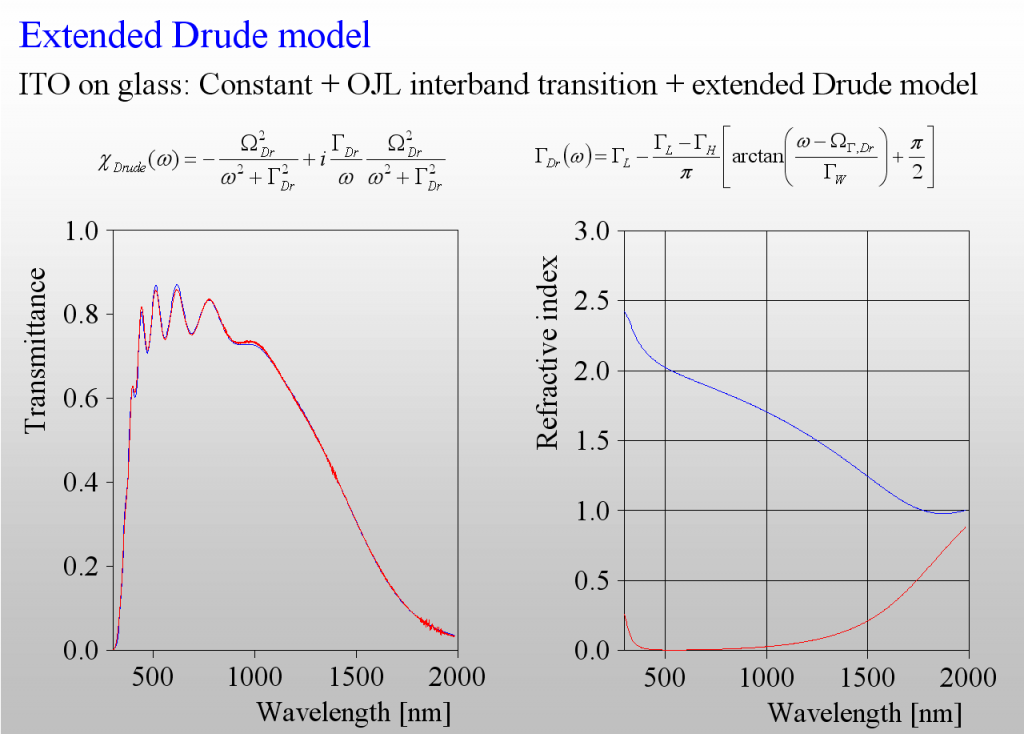

The only class of materials for which we need more than the Drude model is that of highly doped semiconductors, i.e. those materials which are used for transparent but conductive layers (TCOs). The following example shows the difference: the transmission spectrum of an ITO layer is not adequately reproduced by the Drude model, which is too simple in this case:

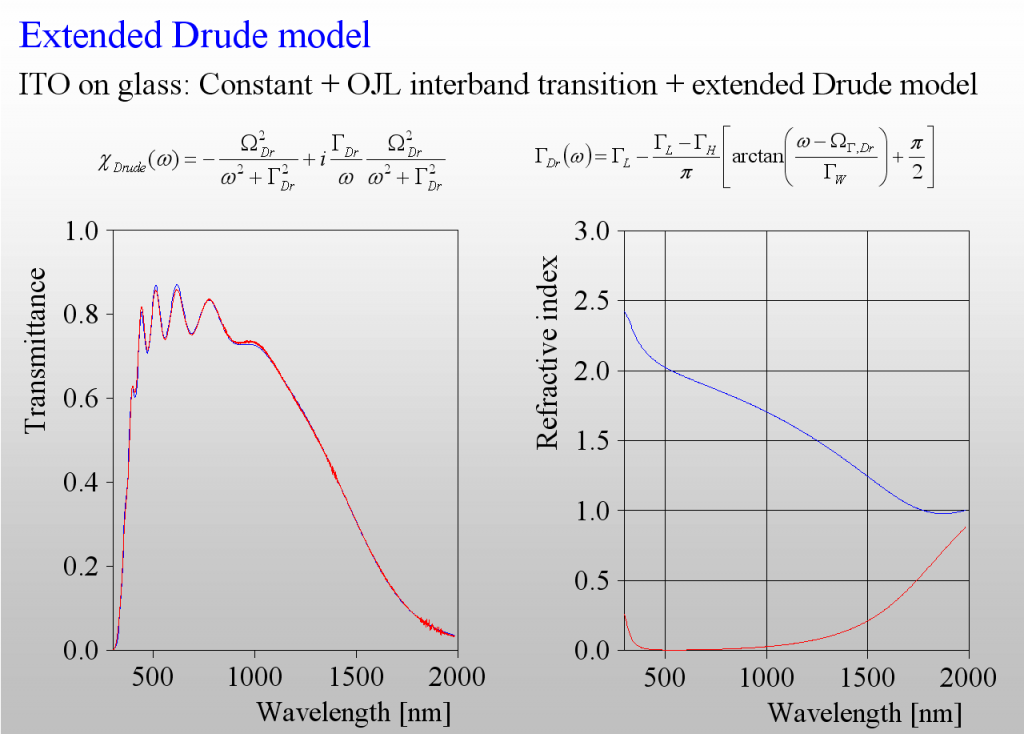

Again, as in the harmonic oscillator case, we need to introduce frequency-dependent damping. As it turns out, we need a smooth transition between 2 levels of damping (high in the mid infrared, low in the near infrared):

The 3 extra parameters of this model describe the difference of the damping constants, the width as well as the spectral position of the transition zone.
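One way to sketch such a transition is an arctan step in the damping. This functional form and the parameter names are assumptions for illustration; the implemented model may use a different smooth function. All frequencies are taken in the same unit, e.g. wavenumbers.

```python
import math


def damping(omega, gamma_low, delta, position, width):
    """Frequency-dependent damping.

    gamma_low: damping far below the transition
    delta:     difference of the two damping levels
    position:  spectral position of the transition zone
    width:     width of the transition zone
    """
    step = math.atan((omega - position) / width) / math.pi + 0.5  # runs 0 -> 1
    return gamma_low - delta * step


def extended_drude_epsilon(omega, omega_p, gamma_low, delta, position, width,
                           eps_background=1.0):
    """Drude term with the constant damping replaced by damping(omega)."""
    gamma = damping(omega, gamma_low, delta, position, width)
    return eps_background - omega_p**2 / (omega**2 + 1j * gamma * omega)


# Hypothetical values in cm^-1 (illustration only)
for omega in (1000.0, 4000.0, 6000.0, 8000.0, 12000.0):
    print(omega, round(damping(omega, 1200.0, 900.0, 6000.0, 800.0), 1))
```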

For many applications TCOs need to be rather thick (a few hundred nm). Be prepared to face depth inhomogeneity in such layers. In this case you need to use 2 or more sublayers which differ in the concentration of charge carriers.

Mixed materials

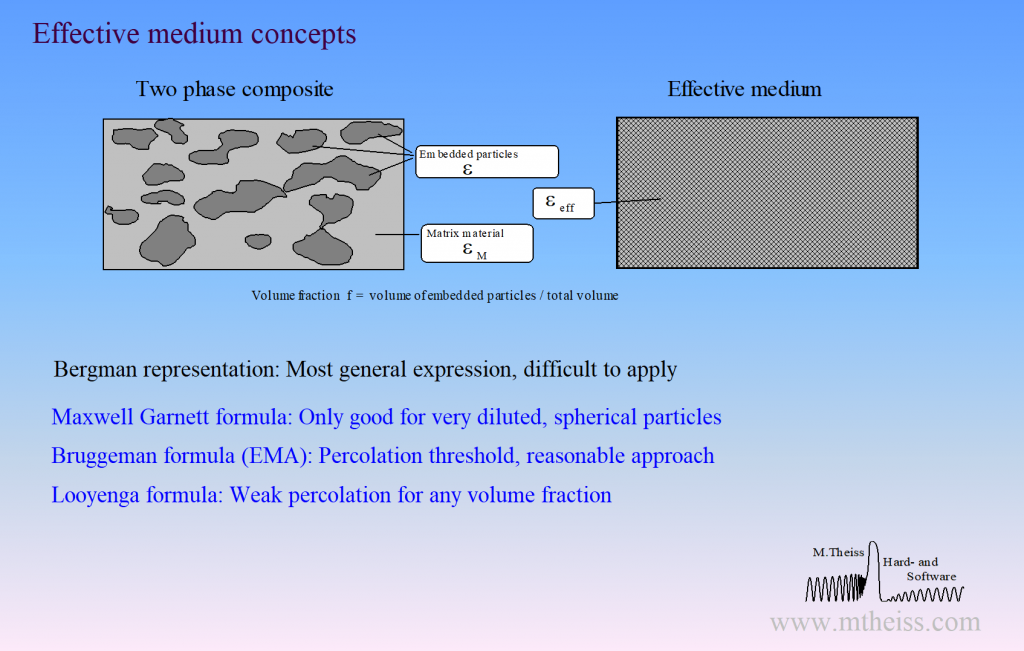

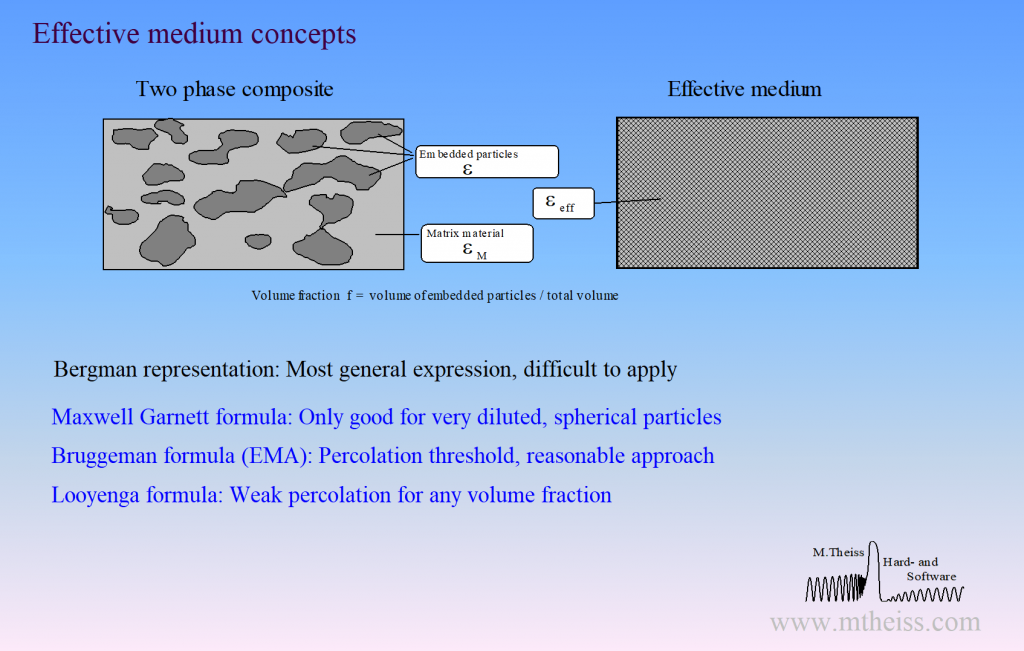

Surface roughness, porous materials or mixtures of 2 phases require the computation of effective optical constants.

Unfortunately, there is no unique way of mixing optical constants. Our software packages have the following implementations for effective material properties:

- Bruggeman model (also known as EMA = Effective Medium Approximation)

- Maxwell Garnett model

- Looyenga model

- Bergman representation (very general, very powerful, but very difficult to apply). In fact, the Bergman representation includes all simple concepts, i.e. Bruggeman, Maxwell Garnett and Looyenga are special cases within the Bergman formalism.
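The three simple mixing rules can be sketched in their textbook forms for spherical particles. This is not necessarily how our software implements them; the function names and the root selection in the Bruggeman case are my own choices, and the Bergman representation is not covered.

```python
import cmath


def bruggeman(eps1, eps2, f1):
    """Bruggeman: both phases are treated symmetrically; f1 is the fraction of phase 1."""
    eps1, eps2 = complex(eps1), complex(eps2)
    # Solves 2*eps^2 - b*eps - eps1*eps2 = 0
    b = (3 * f1 - 1) * eps1 + (2 - 3 * f1) * eps2
    root = cmath.sqrt(b * b + 8 * eps1 * eps2)
    candidates = ((b + root) / 4, (b - root) / 4)
    # Heuristic: keep the absorbing root (Im >= 0), or the positive one if both are real
    return max(candidates, key=lambda e: (round(e.imag, 12), e.real))


def maxwell_garnett(eps_inclusion, eps_host, f_inclusion):
    """Maxwell Garnett: isolated inclusions embedded in a host material."""
    beta = f_inclusion * (eps_inclusion - eps_host) / (eps_inclusion + 2 * eps_host)
    return eps_host * (1 + 2 * beta) / (1 - beta)


def looyenga(eps1, eps2, f1):
    """Looyenga: the cube roots of the dielectric functions are averaged."""
    mixed = f1 * complex(eps1) ** (1 / 3) + (1 - f1) * complex(eps2) ** (1 / 3)
    return mixed**3


# Hypothetical values: a glass-like dielectric and a metal-like material in air
for name, eps in (("dielectric", 2.13), ("metal-like", -10.0 + 0.5j)):
    print(name,
          bruggeman(eps, 1.0, 0.5),
          maxwell_garnett(eps, 1.0, 0.5),
          looyenga(eps, 1.0, 0.5))
```

For the dielectric the three results lie close together, whereas for the metal-like value they differ strongly.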

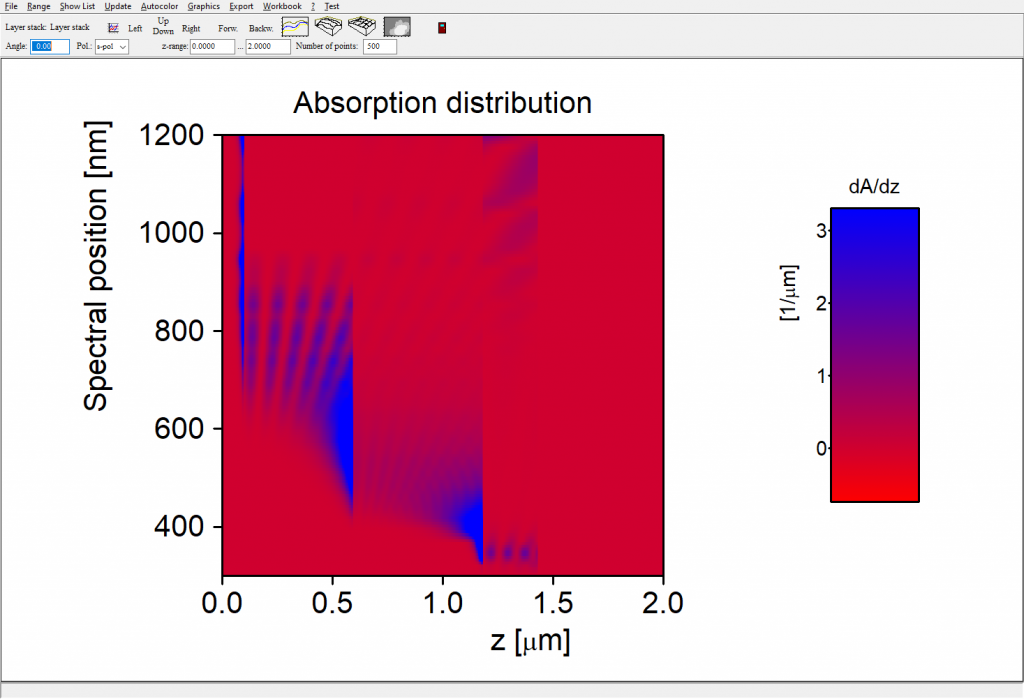

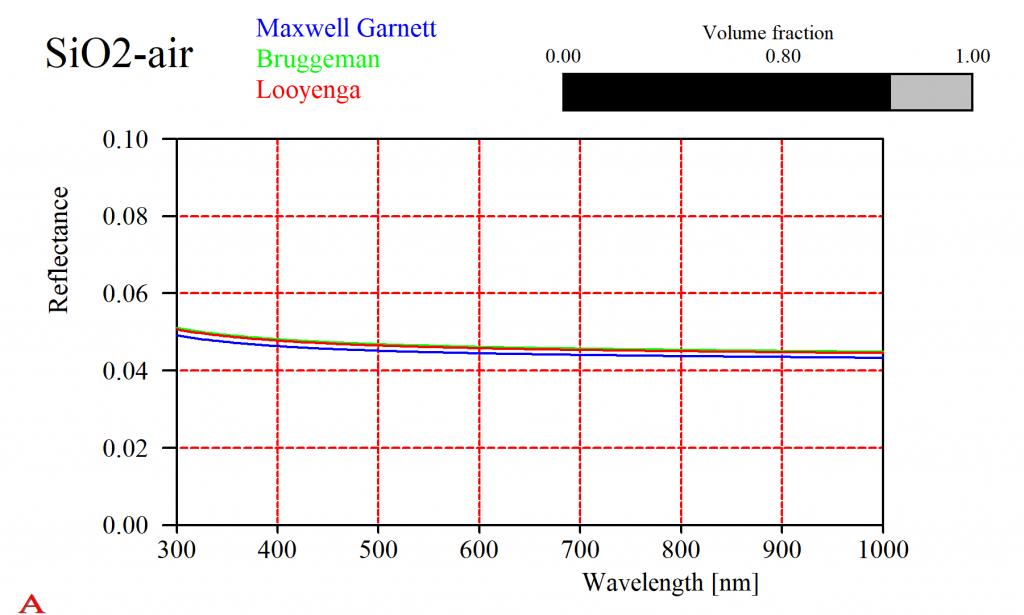

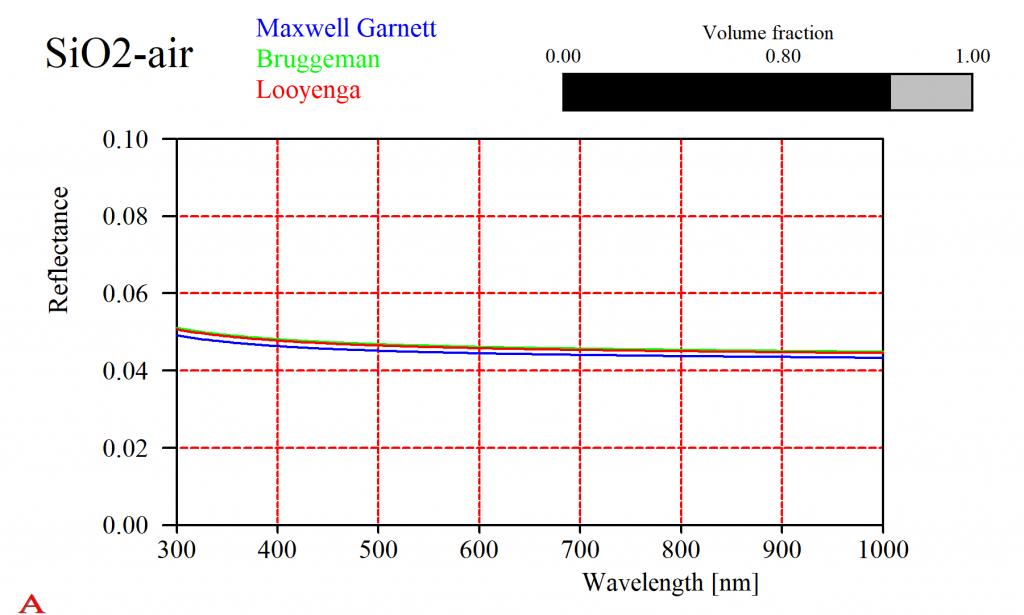

For some composites it does not really matter which approach you take. Here is the reflectance of a SiO2-air mixture, computed using the simple expressions given above:

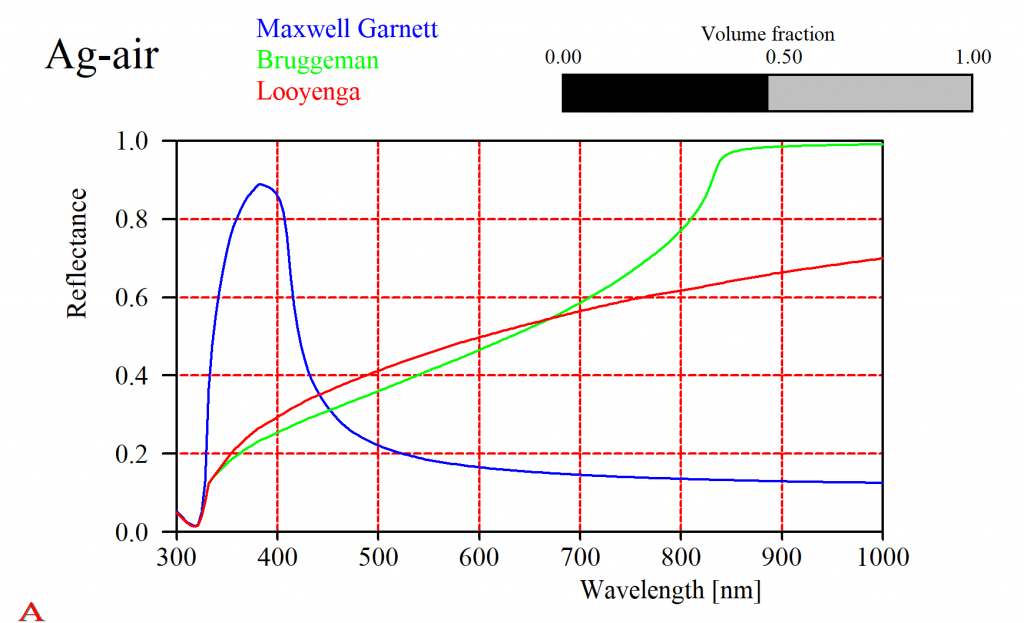

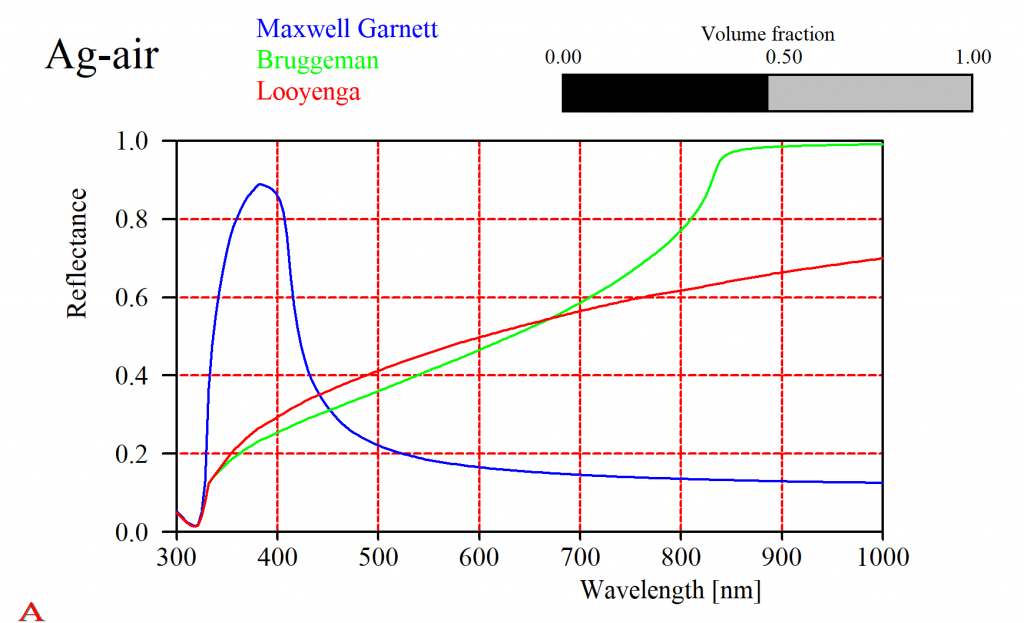

This should not lead to the conclusion that it is always the case. Metals in particular show a large variety of mixed properties. For a silver-air mixture the simple solutions give very different results (in fact, all of them will be quite wrong and you have to use the difficult Bergman approach):

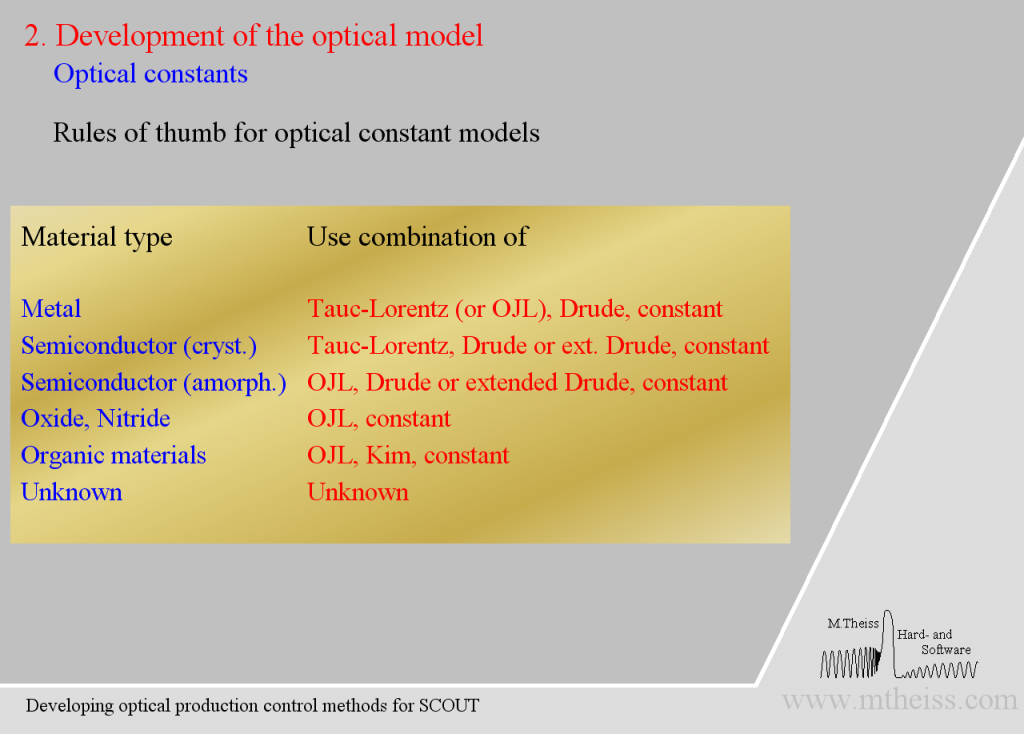

Which model should I use?

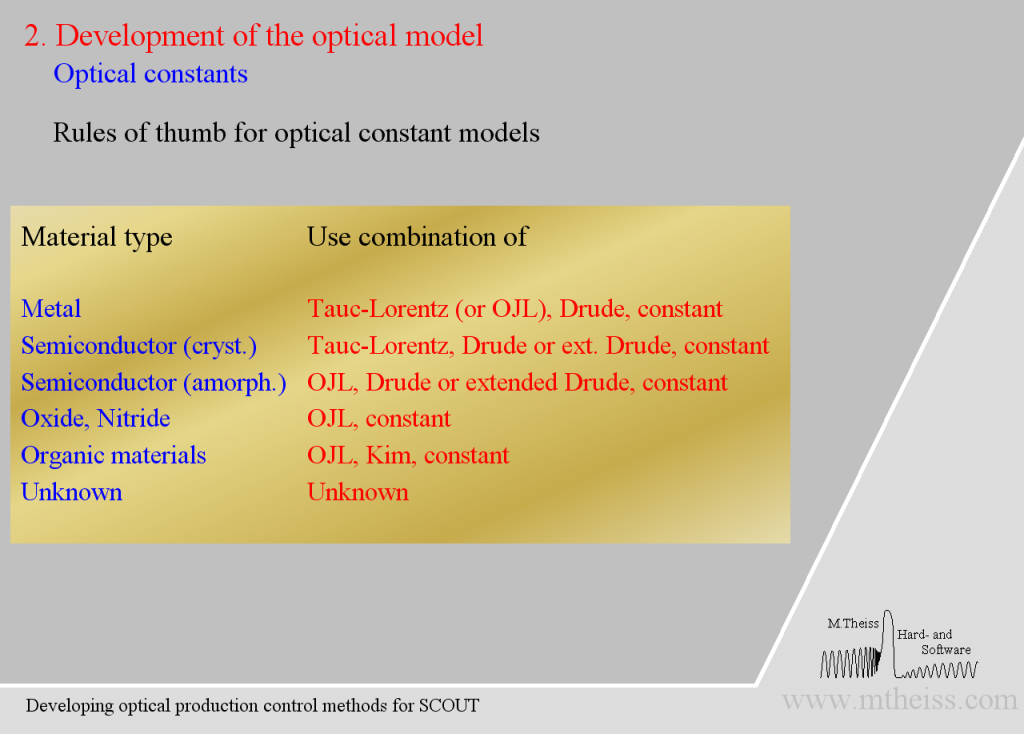

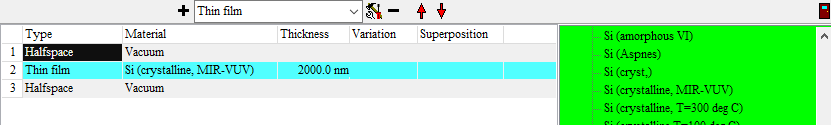

Finally, here are some recommendations on which model to choose: